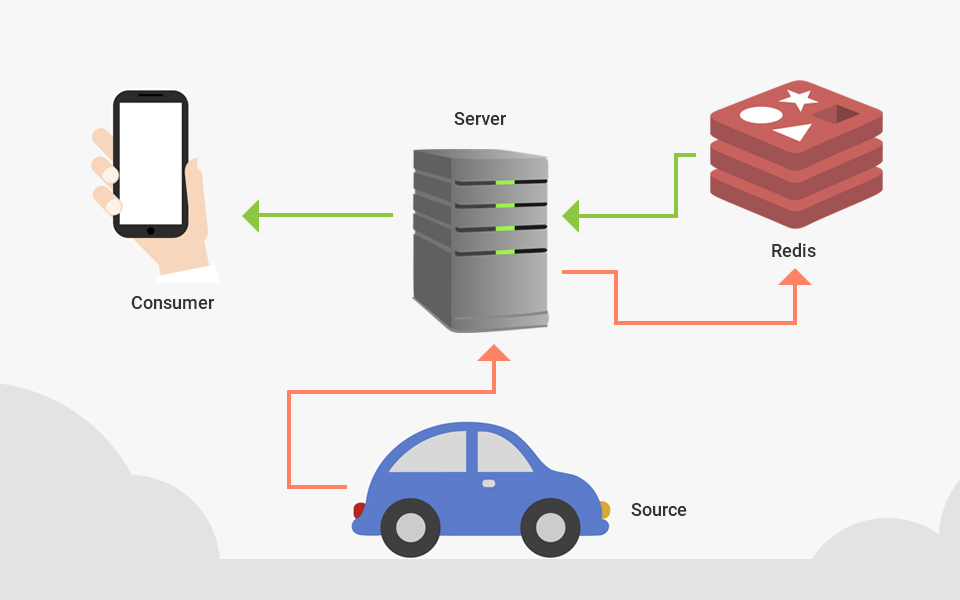

Ever wondered how Uber, Ola, Swiggy and Zomato do real-time tracking? Stuck with performance issues in your own real-time tracking? Then this is the right article for you. I will try to design a simple yet effective system that can handle the load and scale with ease. I will be using my favourite caching server, Redis, but you can choose any caching server that supports clustering. I won't be writing production code, as the objective of this article is system design.

Before we begin, let's look at the common bottlenecks in real-time tracking systems:

- Writing information from the source to persistent storage.

- Reading data from persistent storage and transmitting it to the client.

- Handling a high volume of requests.

Create a tracking session

It's better to create a dedicated tracking session, decoupled from all the other authorization machinery we run for various APIs; this reduces compute time on every tracking request. This can be handled in two ways:

- Create a source-id for the source; data coming from the source will be identified by this source-id. Add it to Redis like this:

{ "sources": { "source-id": "" } } (I am using HSET. The source-id doesn't need any data associated with it, but you can store anything you want.)

Now create a separate client-id for the client and map it to the source-id like this:

{ "client-source": { "client-id": "source-id" } } Save this to Redis (again, I am using HSET). Never share the source-id with the client.

- Alternatively, create a single tracking session-id and share it with both the source and the client: the source PUTs data using this session-id, while the client GETs data using the same session-id. Make sure the client can never make a PUT request with the session-id. I would only recommend this approach when the source runs on localhost or inside an intranet.

Sample data from the source: { "tracking-id": "data from source" }. I recommend using PSETEX in Redis, which lets you set an expiry in milliseconds; Redis then removes the key automatically once it expires.
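A minimal Python sketch of the first option (separate source-id and client-id), assuming a hypothetical in-memory class as a stand-in for Redis. Its hset/hget methods follow the same argument order as redis-py's `hset(name, key, value)` and `hget(name, key)`, and the IDs are random UUIDs like the sample IDs used in this article.

```python
from __future__ import annotations

import uuid


class InMemoryHashStore:
    """Hypothetical stand-in for Redis hashes (HSET/HGET); illustration only."""

    def __init__(self) -> None:
        self._hashes: dict[str, dict[str, str]] = {}

    def hset(self, name: str, key: str, value: str) -> None:
        self._hashes.setdefault(name, {})[key] = value

    def hget(self, name: str, key: str) -> str | None:
        # Like Redis, a missing hash or field yields nil (None).
        return self._hashes.get(name, {}).get(key)


def create_tracking_session(store: InMemoryHashStore) -> tuple[str, str]:
    """Register a source and a client mapped to it; returns (source_id, client_id).

    Only client_id is handed to the client; source_id goes to the source alone.
    """
    source_id = str(uuid.uuid4())
    client_id = str(uuid.uuid4())
    # HSET sources <source-id> ""   (existence is all that matters)
    store.hset("sources", source_id, "")
    # HSET client-source <client-id> <source-id>
    store.hset("client-source", client_id, source_id)
    return source_id, client_id
```

The client never sees the source-id; it only holds a key that the server can resolve to one.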

Putting data from the source

Requests can be handled in multiple ways. The most performant is a WebSocket or a stream rather than many separate requests, since it avoids the latency of repeated handshakes when establishing connections. You can then make the system event-driven using reactive frameworks. Still, using separate requests won't hurt unless adding a few ms to your response time is unacceptable. Either way, make sure your clients don't 'DoS' your server; you can achieve this by adding some randomness to the request frequency, so that requests don't all arrive in synchronized bursts.
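One way to add that randomness, sketched in Python: each client waits a base interval plus or minus a random fraction of it before its next request, so clients that started together drift apart instead of hitting the server in lockstep. The function name, the 2-second base and the 20% jitter are hypothetical values for illustration.

```python
from __future__ import annotations

import random


def next_poll_delay(base_seconds: float, jitter_fraction: float = 0.2,
                    rng: random.Random | None = None) -> float:
    """Return base_seconds shifted by a random amount within +/- jitter_fraction.

    Clients that start polling at the same moment drift apart over time
    instead of hitting the server in synchronized bursts.
    """
    if rng is None:
        rng = random.Random()
    spread = base_seconds * jitter_fraction
    return base_seconds + rng.uniform(-spread, spread)
```

In a client loop this would be used as `time.sleep(next_poll_delay(2.0))` before each request.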

For the sake of simplicity, let's use plain HTTP requests. Here is a sample request:

PUT

Request header

source-id:9bcd39fe-b10a-46c4-b9c2-e6ebf8022f30

Request Body

27.173891:78.042068

Authenticate the request by checking whether the source-id exists in Redis, in the hash where we saved it. I usually use HGET and check whether the result is nil (null); a nil check is cheaper than a string comparison in most languages. Next, persist the data. It's better to save the request body just as it is and send it to the client without much processing, unless you have to apply complicated business logic to it. There is no need to follow the JSON or XML standard, as validating such structures takes computation time. Whether saving time this way is worth it is arguable, but the goal here is to reduce latency and save compute time.
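A minimal Python sketch of the authentication check, with a hypothetical in-memory class standing in for Redis's HGET on the sources hash. Note the explicit `is not None` test: the sources hash stores an empty string, which is falsy in Python, so a plain truthiness check would reject every valid source.

```python
from __future__ import annotations


class SourcesHashStub:
    """Hypothetical stand-in for HGET on the Redis "sources" hash."""

    def __init__(self, sources: dict[str, str]) -> None:
        self._hashes = {"sources": dict(sources)}

    def hget(self, name: str, key: str) -> str | None:
        # Mirrors Redis: nil (None) when the hash or field does not exist.
        return self._hashes.get(name, {}).get(key)


def is_known_source(store: SourcesHashStub, source_id: str | None) -> bool:
    if source_id is None:  # the source-id header was missing
        return False
    # The stored value is "", which is falsy, so compare against None
    # instead of relying on truthiness.
    return store.hget("sources", source_id) is not None
```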

Save the data to Redis like this:

{ "9bcd39fe-b10a-46c4-b9c2-e6ebf8022f30": "27.173891:78.042068" }

I recommend the Redis command PSETEX, as we don't want data lingering here past the point where it becomes irrelevant. Also, when new data is received, the old value is simply overwritten. The sole purpose of Redis here is to hold only the latest relevant data.
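To make the PSETEX behaviour concrete, here is a Python sketch with a hypothetical in-memory store that mimics it: every write overwrites the previous value and resets the expiry, and reads after the expiry return nothing. The 30-second TTL is an assumed value; pick one that matches how quickly a location becomes irrelevant in your application. The injectable clock exists only to make the expiry testable. With redis-py, the equivalent write is `r.psetex(source_id, ttl_ms, body)`.

```python
from __future__ import annotations

import time
from typing import Callable


class InMemoryTTLStore:
    """Hypothetical stand-in for Redis string keys written with PSETEX and read with GET."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data: dict[str, tuple[str, float]] = {}

    def psetex(self, name: str, time_ms: int, value: str) -> None:
        # Like PSETEX: overwrite any previous value and reset the expiry.
        self._data[name] = (value, self._clock() + time_ms / 1000.0)

    def get(self, name: str) -> str | None:
        entry = self._data.get(name)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[name]  # lazily drop the expired key
            return None
        return value


LOCATION_TTL_MS = 30_000  # assumed: a location older than 30 s is irrelevant


def save_latest_location(store: InMemoryTTLStore, source_id: str, body: str) -> None:
    # Store the raw body under the source-id; no parsing or validation.
    store.psetex(source_id, LOCATION_TTL_MS, body)
```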

If you want to log this data, push it to a messaging server such as Apache Kafka and handle it somewhere else. Make sure this doesn't keep the request thread busy.
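A sketch of keeping logging off the request thread, in Python, assuming a standard-library queue and a background thread as a stand-in for a real Kafka producer. The `sink` callable is hypothetical; in practice it would hand the record to your Kafka client. The request thread only enqueues, and if the queue is full the log entry is dropped rather than slowing down the request.

```python
from __future__ import annotations

import queue
import threading
from typing import Callable


class AsyncLogPublisher:
    """Hands location updates to a background worker so request threads never block.

    `sink` is a hypothetical callable standing in for a real Kafka producer call.
    """

    def __init__(self, sink: Callable[[str, str], None], maxsize: int = 10_000) -> None:
        self._queue: queue.Queue = queue.Queue(maxsize=maxsize)
        self._sink = sink
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def publish(self, source_id: str, body: str) -> bool:
        """Enqueue without blocking; returns False (entry dropped) if the queue is full."""
        try:
            self._queue.put_nowait((source_id, body))
            return True
        except queue.Full:
            return False

    def _run(self) -> None:
        while True:
            source_id, body = self._queue.get()
            try:
                self._sink(source_id, body)
            except Exception:
                pass  # a failing sink must not kill the worker; real code would log/count this
            finally:
                self._queue.task_done()

    def flush(self) -> None:
        """Block until every queued entry has been handed to the sink (e.g. on shutdown)."""
        self._queue.join()
```

Dropping on overflow favours request latency over complete logs; if every point must be logged, that trade-off needs revisiting.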

As the response, a status code alone will suffice. Don't spend time building a response message; skipping it also saves a KB or two per request, which is a big deal when scaled to millions of requests!
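Putting the PUT path together, here is a Python sketch using the standard library's http.server. The module-level set and dict are hypothetical stand-ins for the Redis sources hash and the PSETEX'd keys (expiry omitted for brevity), and the 401 status for unknown sources is an assumed choice. A successful update is answered with a bare 204 No Content, so no response body is built or sent.

```python
from __future__ import annotations

from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical in-process stand-ins for the Redis structures described above.
SOURCES: set[str] = set()       # plays the role of the "sources" hash
LATEST: dict[str, bytes] = {}   # plays the role of the PSETEX'd keys (no TTL here)


class TrackingHandler(BaseHTTPRequestHandler):
    def do_PUT(self) -> None:
        source_id = self.headers.get("source-id")
        if source_id not in SOURCES:  # also covers a missing header (None)
            self.send_response(401)
            self.send_header("Content-Length", "0")
            self.end_headers()
            return
        length = int(self.headers.get("Content-Length", "0"))
        LATEST[source_id] = self.rfile.read(length)  # stored as-is, no parsing
        # The status code is the whole response: nothing to build or send.
        self.send_response(204)
        self.end_headers()

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging on the hot path


def make_server(port: int = 0) -> ThreadingHTTPServer:
    # port=0 lets the OS pick a free port; use a fixed port in a real deployment.
    return ThreadingHTTPServer(("127.0.0.1", port), TrackingHandler)
```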

Getting data to the client

The sole purpose of this API is to get the latest update from the source, not the entire history. Most applications don't need the full history all the time. If old data is relevant to your application, call a separate API first and populate that data in the application; this is where the logging mentioned above comes in handy. Then call this API to get the latest update.

Don't fetch historical data into the client application unless necessary. For an application like Uber, the location history matters to you only for record-keeping; a client tracking the driver's location only needs the latest location. You can even plot the path in the front end using the data received from past API calls.
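A Python sketch of plotting the path on the client from past responses: the client appends each latest location it receives to a bounded buffer and draws the path from that, so the server never has to send history. The class name and the 500-point default are hypothetical.

```python
from __future__ import annotations

from collections import deque


class LocationTrail:
    """Client-side path built from successive "latest location" responses.

    Keeps only the last `max_points` points so memory stays bounded.
    """

    def __init__(self, max_points: int = 500) -> None:
        self._points: deque[tuple[float, float]] = deque(maxlen=max_points)

    def add(self, lat: float, lng: float) -> None:
        # Skip repeats: polling often returns the same location twice in a row.
        if not self._points or self._points[-1] != (lat, lng):
            self._points.append((lat, lng))

    def points(self) -> list[tuple[float, float]]:
        return list(self._points)
```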

Here is a sample request:

GET

Request header

client-id:ce3c6f66-ada3-45cd-b9aa-e9baea1f9b8d

Fetch the source-id for the client-id from Redis (HGET on the client-source hash), then use that source-id to fetch the data we saved. I recommend the Redis GET command with the source-id as the key. As mentioned before, avoid any manual processing here: you can send the data from Redis directly as the response. This may look like a shady practice, but unless there is a bug in either the source or the client (which shouldn't happen in the first place), it shouldn't cause any issues.
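A minimal Python sketch of the GET path, assuming a hypothetical in-memory class in place of Redis. It makes exactly the two calls described above, HGET on client-source and GET on the source-id, and returns the stored bytes untouched. Returning 404 when no fresh update exists (none received yet, or the PSETEX expiry has passed) is an assumed choice.

```python
from __future__ import annotations


class InMemoryRedis:
    """Hypothetical stand-in exposing only the two Redis reads the GET path needs."""

    def __init__(self) -> None:
        self.hashes: dict[str, dict[str, str]] = {}
        self.strings: dict[str, bytes] = {}

    def hget(self, name: str, key: str) -> str | None:
        return self.hashes.get(name, {}).get(key)

    def get(self, name: str) -> bytes | None:
        return self.strings.get(name)


def handle_get(store: InMemoryRedis, client_id: str | None) -> tuple[int, bytes]:
    """Return (HTTP status, body) for a client's request for the latest update."""
    if client_id is None:
        return 401, b""
    # Call 1: HGET client-source <client-id>  ->  source-id
    source_id = store.hget("client-source", client_id)
    if source_id is None:
        return 401, b""
    # Call 2: GET <source-id>  ->  latest raw payload written by the source
    latest = store.get(source_id)
    if latest is None:
        return 404, b""  # nothing received yet, or the PSETEX expiry has passed
    # Pass Redis's bytes straight through as the response body.
    return 200, latest
```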

Keep the response to the point: if you need a location, send only the latitude and longitude. If you can manage a format like "latitude:longitude" as the response, it can save you a lot of bandwidth and time (when scaled to millions of requests, obviously!).

Here is a sample response:

27.173891:78.042068
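On the client, the compact body is trivial to parse; a Python sketch follows, with a hypothetical function name. For comparison, the same coordinates serialized with Python's json.dumps as {"latitude": ..., "longitude": ...} take 47 bytes, versus 19 for the compact form.

```python
from __future__ import annotations


def parse_location(raw: bytes) -> tuple[float, float]:
    """Parse a b"latitude:longitude" response body into (latitude, longitude)."""
    lat_text, lng_text = raw.decode("ascii").split(":", 1)
    # float() ignores surrounding whitespace, so "lat: lng" parses as well.
    return float(lat_text), float(lng_text)
```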

Handling a high volume of requests

First, Redis supports clustering, so you don't need to do much to scale Redis itself. Second, choose a server framework that manages threads efficiently. I would recommend Go, but Node.js works too.

It's fine to build the two APIs described above as a separate project following these suggestions; you don't necessarily have to port everything.

Result

This is a simple server that uses the classic request/response model to transfer data. By using reactive frameworks and Redis's publish/subscribe support, however, we can build an event-driven system. Event-driven systems are more efficient, but they need much more care and a really good set of frameworks to deliver the performance improvement you would expect.
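To illustrate the publish/subscribe idea, here is a Python sketch with a hypothetical in-process class standing in for Redis PUBLISH/SUBSCRIBE. On each update, the PUT handler publishes to a per-source channel (the location:<source-id> naming is an assumption), and subscribers receive it immediately instead of polling. In a real deployment a subscriber would typically forward each message to connected clients, for example over WebSockets. Like Redis PUBLISH, publish here returns the number of subscribers that received the message.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable


class InProcessPubSub:
    """Hypothetical in-process stand-in for Redis PUBLISH/SUBSCRIBE semantics."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[bytes], None]]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callable[[bytes], None]) -> None:
        self._subscribers[channel].append(callback)

    def publish(self, channel: str, message: bytes) -> int:
        # Like Redis PUBLISH, return how many subscribers received the message.
        callbacks = self._subscribers.get(channel, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


def on_source_update(bus: InProcessPubSub, source_id: str, body: bytes) -> int:
    # Push each update to a per-source channel instead of waiting for clients to poll.
    return bus.publish(f"location:{source_id}", body)
```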

For now, though, if you followed everything as I suggested, your response time should be within 50-100 ms. Redis usually takes no more than 1-10 ms to fetch the data, and we make only two calls, so that shouldn't add up to more than 20 ms; the rest is the time your server takes to process the result and send the response. I know I made some controversial design decisions, like saving data from the source as-is and sending unstructured data to the client; you can always add structure if you need it. But keep in mind that parsing JSON can be computation-intensive in languages like Java: even if you avoid libraries and do it by hand, there is a lot of string processing involved.
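The latency budget above, written out using the upper figure of 10 ms per Redis call: to guarantee the target response time in the worst case, everything other than the two Redis calls (server processing plus sending the response, written here as t_rest) must fit in what remains.

```latex
\begin{aligned}
t_{\text{response}} &= t_{\text{HGET}} + t_{\text{GET}} + t_{\text{rest}},
  \qquad t_{\text{HGET}},\, t_{\text{GET}} \le 10\,\text{ms} \\
t_{\text{response}} \le t_{\text{target}}
  &\;\Leftarrow\; t_{\text{rest}} \le t_{\text{target}} - 20\,\text{ms}
  \;=\; \begin{cases} 30\,\text{ms}, & t_{\text{target}} = 50\,\text{ms} \\
                      80\,\text{ms}, & t_{\text{target}} = 100\,\text{ms} \end{cases}
\end{aligned}
```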